Input & Movement > Custom InputAction Mapping

While the VRSimulator defines a fairly comprehensive set of default Input Actions for you, there may be situations where you wish to modify one of the default InputActions, add a new InputAction of your own, or replace the default InputActions entirely.

Using C# scripting and the InputAction, InputAxis, and InputButton classes (and their derivatives), you can do all three.

Be Careful!

We consider Custom InputAction Mapping to be the most complicated type of integration with the VRSimulator. Because it directly relates to the inputs that will be captured from your users, any mistakes, bugs, or inefficiencies introduced here are likely to fundamentally destabilize your user experience. Proceed at your own risk.

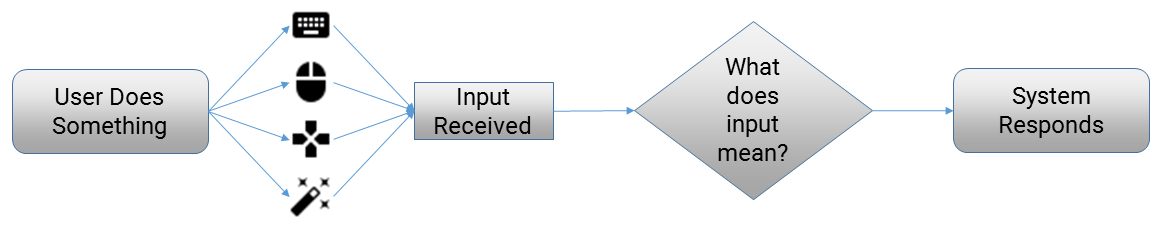

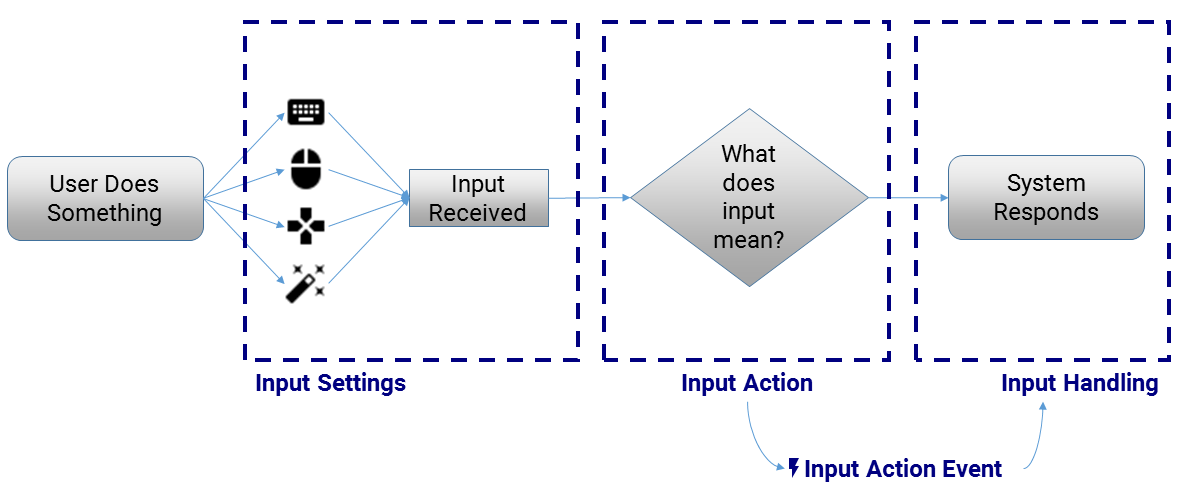

The way movement works in any VR experience (or computer game, etc.) is really straightforward:

The software needs to receive (capture) the user's input from whatever device was used. Based on the input received (what button was pressed, what thumbstick was moved, etc.) the system needs to decide what that input means ("Move the player forward", "Fire the blaster cannon", etc.). And once that decision is made, the system needs to respond and do whatever it should do ("Move the player one step forward", "Start the process of firing the blaster cannon").
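The three steps above (capture, interpret, respond) can be sketched in plain C#. This is an illustration only: `Intent`, `InputPipeline`, and the input names such as `"Keyboard.W"` are hypothetical and are not part of the VRSimulator or Unity APIs.

```csharp
using System.Collections.Generic;

// Hypothetical names throughout; none of these types come from the VRSimulator.
public enum Intent { None, MoveForward, FireCannon }

public static class InputPipeline
{
    // Step 2 (interpret): map raw input names, from any device, to an intent.
    private static readonly Dictionary<string, Intent> intentByInput =
        new Dictionary<string, Intent>
        {
            { "Keyboard.W", Intent.MoveForward },
            { "Gamepad.LeftStickUp", Intent.MoveForward },
            { "Mouse.LeftButton", Intent.FireCannon }
        };

    public static Intent Interpret(string rawInput)
    {
        Intent intent;
        return intentByInput.TryGetValue(rawInput, out intent) ? intent : Intent.None;
    }

    // Step 3 (respond): carry out whatever the intent calls for.
    public static string Respond(Intent intent)
    {
        switch (intent)
        {
            case Intent.MoveForward: return "Move the player one step forward";
            case Intent.FireCannon: return "Start the process of firing the blaster cannon";
            default: return "Do nothing";
        }
    }

    // Step 1 (capture) is simulated by passing in the name of the input that was received.
    public static string Handle(string rawInput)
    {
        return Respond(Interpret(rawInput));
    }
}
```

In this sketch, `InputPipeline.Handle("Keyboard.W")` and `InputPipeline.Handle("Gamepad.LeftStickUp")` produce the same response, because both inputs are interpreted as the same intent.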

Where this can get complicated is that most input devices are built and designed differently, have different hardware features, different drivers, etc. And different experiences (and even different users within the same experience!) may want to have different button layouts, controller configurations, etc. for their style of play. Trying to accommodate all of the variability that's out there can get very complicated, very fast.

This is why we have broken this process into four key concepts:

Be Aware

Not all input devices supported by the VRSimulator are reflected in the Unity Input Manager. In particular, VR-specific devices like the HTC/Vive Wands, the Oculus Remote, and the Oculus Touch are only accessible via their corresponding Unity plugin (SteamVR plugin and Oculus Utilities for Unity). The VRSimulator supports these devices through special device-specific logic which adds an easier-to-understand abstraction layer to the platform-specific APIs.

Input Actions are coordinated by the InputActionManager [ Configuration | Scripting ]. The InputActionManager is a singleton class which exposes the majority of its functionality via static methods. Its responsibilities are simple:

While the InputActionManager exposes a variety of static properties and methods that you can read from, you will most likely be using:

An InputAction represents a collection of inputs that correspond to the same intent. For example:

While it is possible for an InputAction to correspond to one and only one input device's button/axis, the key benefit of using InputActions is to simplify code by applying the same logic to all inputs that mean the same thing.

InputActions only expose two public methods: InputAction.registerAction() and InputAction.deregisterAction(). As the method names suggest, these methods register and deregister the InputAction with the InputActionManager.
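As a minimal sketch of one InputAction gathering several inputs that mean the same thing, the script below uses only members that appear in this page's examples (`name`, `pressedKeyList`, and `registerAction()`). The class name and the action name `"moveForwardExample"` are hypothetical, and the sketch assumes that any key in `pressedKeyList` triggers the action.

```csharp
using UnityEngine;
using Immerseum.VRSimulator;

public class MoveForwardMapping : MonoBehaviour
{
    void Start()
    {
        // One InputAction represents one intent.
        InputAction moveForward = new InputAction();
        moveForward.name = "moveForwardExample"; // hypothetical name

        // Both keys express the same intent, so both belong to the same InputAction.
        moveForward.pressedKeyList.Add(KeyCode.W);
        moveForward.pressedKeyList.Add(KeyCode.UpArrow);

        // Register the InputAction with the InputActionManager.
        moveForward.registerAction();
    }
}
```

Whatever logic responds to this InputAction then only needs to be written once, regardless of which key the user pressed.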

The key components of the InputAction, however, are its properties. These properties can be divided into three categories:

The list below describes those properties which are most important for defining custom InputActions:

Best Practice

Many InputActions will combine keyboard, button, and axis inputs. For example, one potential InputAction would be to "move forward" based on the keyboard's W key, the gamepad's right thumbstick, or the mouse's scroll wheel. To properly define such an InputAction, each of these inputs needs to be included in the appropriate property described above.

Be Aware

Technically, there is nothing stopping you from including a particular input (a UnityEngine.KeyCode, an InputAxis, or an InputButton) in multiple InputActions. This might lead to some strange behavior (because one input will actually be tied to multiple intentions), but there are certain situations where that may be desired behavior. For example, it would be perfectly reasonable to include the same input in three InputActions where one InputAction will only apply in the "normal" state, a different one will apply if your user has paused the game, and a third might apply if the user is in a "swimming" state.
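The state-dependent case described above can be sketched in plain C# as a lookup keyed first by state and then by input. Everything here is hypothetical and for illustration only: `PlayerState`, `StateAwareBindings`, the input name `"Keyboard.Space"`, and the action names are assumptions, not VRSimulator types or default InputActions.

```csharp
using System.Collections.Generic;

// Hypothetical types for illustration only; not part of the VRSimulator API.
public enum PlayerState { Normal, Paused, Swimming }

public static class StateAwareBindings
{
    // The same input appears once per state, each time bound to a different action name.
    private static readonly Dictionary<PlayerState, Dictionary<string, string>> actionsByState =
        new Dictionary<PlayerState, Dictionary<string, string>>
        {
            { PlayerState.Normal,   new Dictionary<string, string> { { "Keyboard.Space", "jump" } } },
            { PlayerState.Paused,   new Dictionary<string, string> { { "Keyboard.Space", "resumeGame" } } },
            { PlayerState.Swimming, new Dictionary<string, string> { { "Keyboard.Space", "swimUp" } } }
        };

    // Returns the action name bound to the input in the given state, or null if there is none.
    public static string Resolve(PlayerState state, string input)
    {
        Dictionary<string, string> actions;
        string actionName;
        if (actionsByState.TryGetValue(state, out actions) && actions.TryGetValue(input, out actionName))
        {
            return actionName;
        }
        return null;
    }
}
```

The point of the sketch is that a shared input stays unambiguous only as long as exactly one of its actions applies in any given state; your handling logic is what enforces that.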

An InputAxis is used to define a single input which returns a quantitative value of its state (as opposed to a boolean, like a keyboard input). In a non-VR game, such input axes get defined in the Unity Input Manager and you can just access them using Unity's brilliant Input API.

However, one of the challenges in developing for VR is that the Unity Input Manager does not support VR-compatible input devices like the HTC/Vive Wands and Oculus Touch. They can only be accessed through the SteamVR and Oculus Utilities for Unity plugins respectively.

To address this challenge, the VRSimulator input system supports three types of InputAxis (all derive from the generic InputAxis):

Each InputAxis has a set of read-only properties which return the results (the value) of the axis at any given moment. They are fairly clearly documented.

However, when creating a custom InputAction it is important to understand the definition fields that need to be configured:

Be Careful!

If you define a generic InputAxis, its name must match the name set for the axis in the Unity Input Manager; otherwise it will not capture the input's state properly.
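One way to catch a mismatched axis name early is to query the name once through Unity's own `Input.GetAxis`, which throws an `ArgumentException` when no axis with that name is set up in the Input Manager. The sketch below relies only on that Unity call; the class name and the `axisName` field are hypothetical, and it does not touch the VRSimulator's InputAxis class itself.

```csharp
using System;
using UnityEngine;

public class AxisNameCheck : MonoBehaviour
{
    // Hypothetical field: the axis name you plan to use for a generic InputAxis.
    public string axisName = "Horizontal";

    void Start()
    {
        try
        {
            // The returned value is ignored; only the lookup matters here.
            Input.GetAxis(axisName);
        }
        catch (ArgumentException)
        {
            Debug.LogError("No axis named '" + axisName + "' is defined in the Unity Input Manager.");
        }
    }
}
```

Attach the script to any GameObject in a test scene and check the Console on play. It assumes the project uses Unity's Input Manager rather than a replacement input package.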

An OculusInputAxis receives certain additional definition properties:

A SteamVRInputAxis also receives certain additional definition properties:

An InputButton is used to define a single input which returns a button state (pressed, released, held, etc.). In a non-VR game, such buttons get defined in the Unity Input Manager and you can just access them using Unity's brilliant Input API.

However, one of the challenges in developing for VR is that the Unity Input Manager does not support VR-compatible input devices like the HTC/Vive Wands and Oculus Touch. They can only be accessed through the SteamVR and Oculus Utilities for Unity plugins respectively.

To address this challenge, the VRSimulator input system supports multiple types of InputButton (all derive from the generic InputButton):

Each InputButton has a set of read-only properties which return the results (the value) of the button at any given moment. They are fairly clearly documented.

However, when creating a custom InputAction it is important to understand the definition fields that need to be configured:

A MouseButton receives certain additional definition properties:

All OculusButtons (including OculusTouchButton and OculusNearTouchButton) receive certain additional definition properties:

All SteamVRButtons (including SteamVRHairTrigger and SteamVRTouch) also receive certain additional definition properties:

The best way to modify default InputActions is to do so when the EventManager.OnInitializeInputActionsEnd event fires. At that point, all InputActions have been registered with the InputActionManager and can safely be modified without fear of being over-written by the VRSimulator's initialization logic.

There are a variety of ways to programmatically modify registered InputActions, but the way that we recommend is to:

Be Aware

The workflow described above will not work properly if you modify the InputAction's name property (this is used to uniquely identify the InputAction, after all).

The following is an example of this workflow in action. This example adds pressing the Spacebar to the "togglePauseMenu" default InputAction:

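```csharp
using UnityEngine;
using Immerseum.VRSimulator;

public class myClass : MonoBehaviour
{
    void OnEnable()
    {
        // Subscribe so the change runs once the default InputActions are registered.
        EventManager.OnInitializeInputActionsEnd += modifyInputAction;
    }

    void OnDisable()
    {
        EventManager.OnInitializeInputActionsEnd -= modifyInputAction;
    }

    void modifyInputAction()
    {
        // Retrieve the registered InputAction by name.
        InputAction actionToModify = InputActionManager.getInputAction("togglePauseMenu");

        // Add the Spacebar to the keys that trigger it.
        actionToModify.pressedKeyList.Add(KeyCode.Space);

        // Deregister, then re-register the modified InputAction.
        actionToModify.deregisterAction();
        actionToModify.registerAction();
    }
}
```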

Adding a new InputAction to the VRSimulator's movement system is relatively simple:

Below is an example that does this to create a new "pressTheKButton" InputAction:

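```csharp
using UnityEngine;
using Immerseum.VRSimulator;

public class myClass : MonoBehaviour
{
    void Start()
    {
        // Create the InputAction and give it a unique name.
        InputAction myInputAction = new InputAction();
        myInputAction.name = "pressTheKButton";

        // Pressing the K key triggers this InputAction.
        myInputAction.pressedKeyList.Add(KeyCode.K);

        // Register it with the InputActionManager.
        myInputAction.registerAction();
    }
}
```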

If you intend to replace all of the default InputActions with your own, you don't have to go through the modification workflow described above for each one. It is easier to:

The example below provides some sample code for doing so:

```csharp
using UnityEngine;
using Immerseum.VRSimulator;

public class myClass : MonoBehaviour
{
    void OnEnable()
    {
        EventManager.OnInitializeInputActions += createCustomInputActions;
    }

    void OnDisable()
    {
        EventManager.OnInitializeInputActions -= createCustomInputActions;
    }

    void createCustomInputActions()
    {
        // Define a replacement for the default "togglePauseMenu" InputAction.
        InputAction firstInputAction = new InputAction();
        firstInputAction.name = "togglePauseMenu";
        firstInputAction.pressedKeyList.Add(KeyCode.Space);
        firstInputAction.registerAction();

        // Repeat for additional Input Actions
    }
}
```

Provided you left the Default Input Map setting enabled in the MovementManager's settings, you will now simply have different buttons activating the same movement logic.

Putting all of the above together with Custom Input Handling, it is possible to both replace all of the default Input Actions and apply your own custom movement logic. Simply: